Roughly 460 million people (or over 5% of the global population) are deaf and depend on signing as their primary (and often the only) mode of communication. In total it is estimated that there are around 300 distinct sign languages in everyday use around the world.

Sign languages are not pantomimes or some sort of a poor replica of spoken languages, but are fully-fledged natural languages, having all the linguistic characteristics and properties one expects to find in any natural language.

The rich expressiveness of signing is best demonstrated by the artful storytelling, prose, poetry, and other literary artefacts produced by the deaf communities around the world. While sign languages are an effective form of communication within the deaf communities themselves, the deaf struggle to establish communication with hearing people, the majority of which have little to no knowledge of signing.

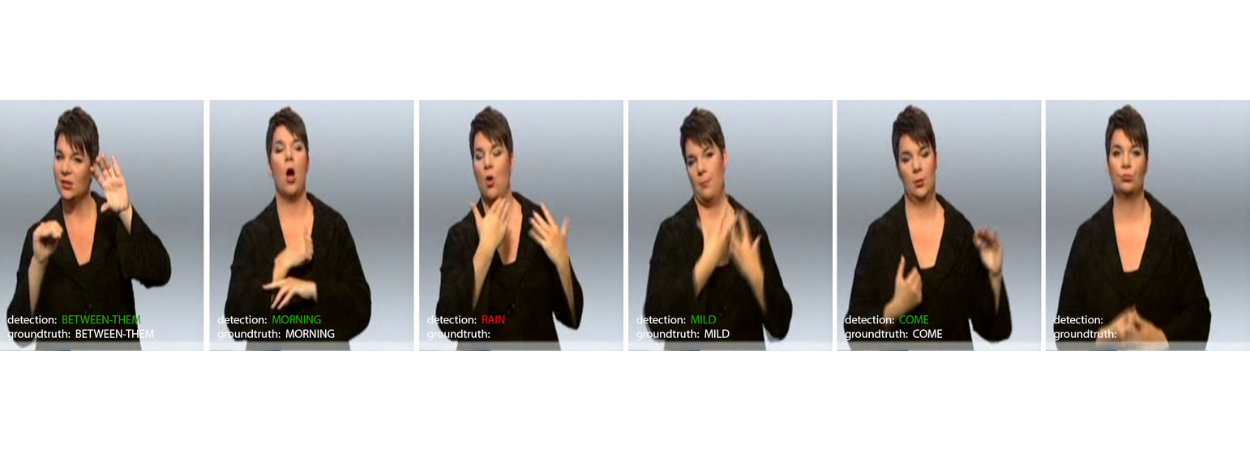

The research field of automated sign language recognition (ASLR) strives to create the needed assistive tools to bridge this communications gap between the deaf and the hearing world. The current state of the art in the field of ASLR is still limited in terms of recognition accuracy and sign language support. Unlike the case of speech recognition, there is still no effective commercially available automated sign language translators available. This is mainly due to the challenging nature of signing, both in terms of vision-based perception, as well as due to the complexities of the sign languages themselves.

Notwithstanding this, advancements in ASLR are progressing at a steady and encouraging pace, especially in recent years with the advent of deep learning, a type of machine learning involving deep neural networks. In our research work we leverage the recognition capabilities of recurrent deep neural networks, while at the same time addressing a number of limitations of the current state-of-the-art systems.

In contrast to the word model approach adopted by the large majority of the current systems, we adopt a sub-word model (subunit) approach, since this provides added advantages like requiring less training data, offering more robustness to new signs, and having the potential of opening access to the equally important non-lexical elements of signing. For generating the subunits themselves, we make use of a novel factorisation-based method that derives hand motion features embedded within a low-rank trajectory space.

We find that our novel choice of subunits are able to preserve semantic meaning, thus successfully addressing the issue of explainability, a problem prevalent in deep neural networks trained in an end-to-end fashion. Another advantage of our approach is that the subunits are transferable across different sign languages, which augurs well for sign languages with limited training corpora such as the Maltese sign language.

Mark Borg has just completed his PhD, supervised by Prof. Kenneth Camilleri from the Department of Systems and Control Engineering, with Prof. Marie Alexander from the Institute of Linguistics and Language Technology acting as Advisor. The work and results obtained throughout the course of the PhD studies were presented at the Sign Language Recognition, Translation and Production (SLRTP) workshop, held on 23 August 2020, under the auspices of the European Conference on Computer Vision (ECCV). More details can be found in the publication accessible from the SLRTP website.